Introduction

Welcome to the 123s of School Choice! This resource is designed to be a one-stop shop for all the existing research on private school choice in the United States. This year’s edition is updated with the research published since our last edition. We provide information about the scope and purpose of this resource below.

Since the first modern-day voucher program launched in Milwaukee in 1990, researchers have studied private school choice programs. Few American education reforms have been studied as much as choice.1 Even fewer, if any, have such a broad array of possible outcomes for students, schools, taxpayers and families.

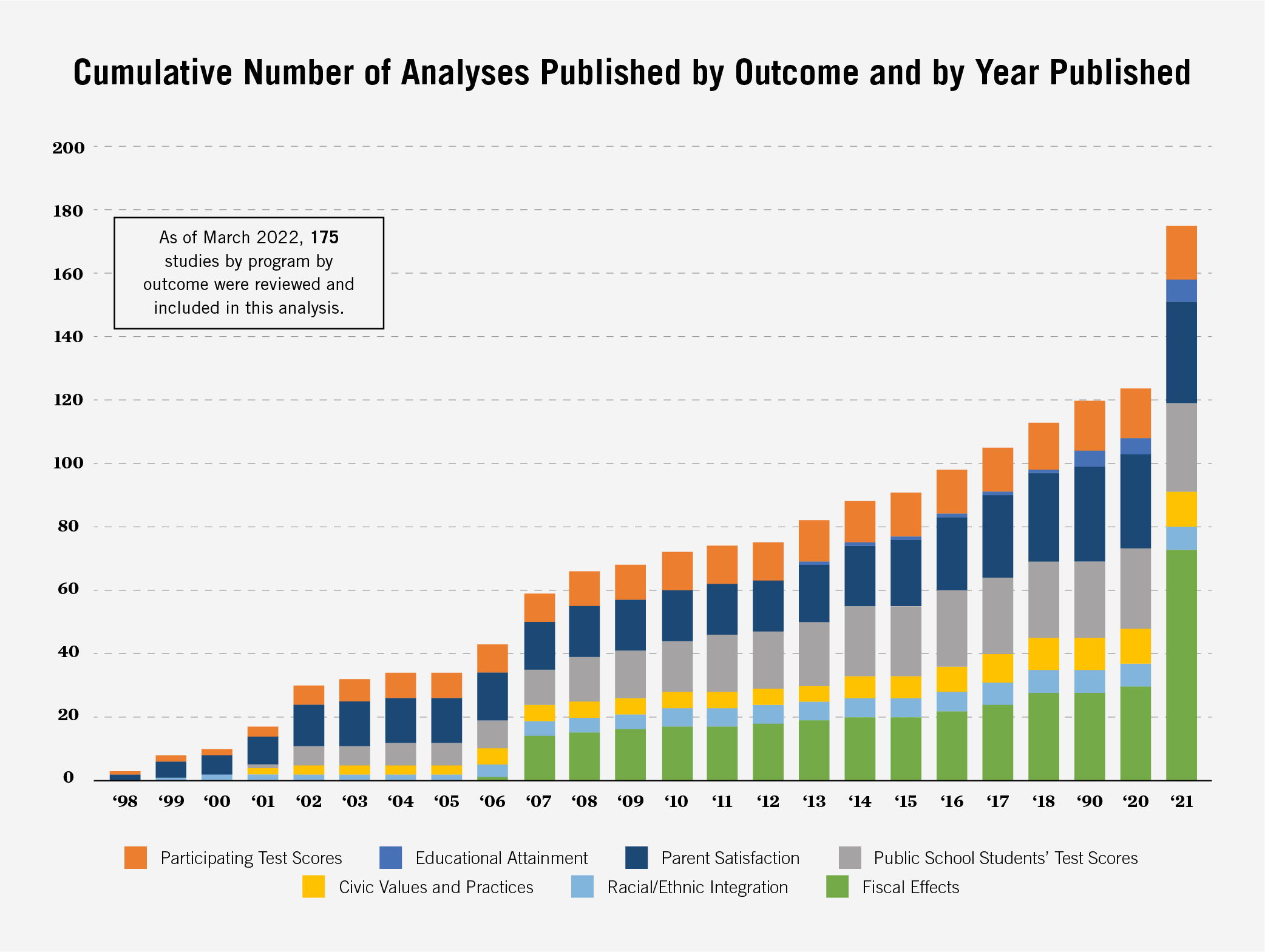

Researchers from across the country have published almost 175 empirical studies on the effectiveness of these programs.

The first set of outcomes we cover are studies of the personal benefits that families can gain from participating in private school choice programs. These include:

- Program Participant Test Scores: These studies examine whether students who receive and/or use scholarships to attend a private school of their choice achieve higher test scores than students who applied for, but did not receive or use scholarships.

- Program Participant Attainment: These studies examine whether school choice programs have an effect on students’ likelihood to graduate high school, enroll in college or attain a college degree.

- Parent Satisfaction: These studies rely on polling and surveys to measure the extent to which parents with children participating in private school choice programs are satisfied with the program.

The second set of outcomes we cover are studies of the benefits that communities and society can gain from these programs. These include:

- Public School Students’ Test Scores: These studies examine whether students who leave public schools by using a private school choice program have an effect on the test scores of students who remain in public schools.

- Civic Values and Practices: These studies examine whether school choice programs have an effect on students’ tolerance for the rights of others, civic knowledge, civic participation, volunteerism, social capital, civic skills, voter registration, voter turnout, and patriotism.

- Racial/Ethnic Integration: These studies examine the effect of private school choice programs on racial and ethnic diversity in schools.

- Fiscal Effects: These studies examine whether school choice programs generate net savings, net costs or are cost-neutral for taxpayers.

Overall Effects Counts for Studies of Private School Choice Programs

| Outcome | Number of Studies | Positive Effect | No Visible Effect | Negative Effect |
|---|---|---|---|---|
| Program Participant Test Scores | 17 | 11 | 4 | 3 |
| Educational Attainment | 7 | 5 | 2 | 0 |
| Parent Satisfaction | 32 | 30 | 1 | 2 |
| Public School Students’ Test Scores | 28 | 25 | 1 | 2 |
| Civic Values and Practices | 11 | 6 | 5 | 0 |
| Integration* | 7 | 6 | 1 | 0 |
| Fiscal Effects | 73 | 68 | 4 | 5 |

*One study employed multiple measures of racial integration and concluded that the effect of the program was neutral overall. We included this study in the “No Visible Effect” column.

Notes: If a study’s analysis produced any positive or negative results or both, we classify those studies as positive, negative or both. Studies that did not produce any statistically significant results for any subgroup are classified as “no visible effect.” The number of effects detected may differ from the number of studies included in the table because we classify one study as having detected both positive and negative effects.
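To make the counting rule in the notes concrete, here is a minimal Python sketch of how such a tally could be computed. The study names and results are hypothetical placeholders, not entries from our review, and the function name `classify` is our own. The sketch only illustrates why the number of effects detected can exceed the number of studies.

```python
from collections import Counter

def classify(significant_directions):
    """Return the labels a study receives under the counting rule.

    `significant_directions` is the set of directions ("positive",
    "negative") in which the study found any statistically significant
    result. A study with both directions receives both labels; a study
    with neither is labeled "no visible effect".
    """
    labels = [d for d in ("positive", "negative") if d in significant_directions]
    return labels or ["no visible effect"]

# Hypothetical studies, for illustration only.
studies = {
    "Study A": {"positive"},
    "Study B": set(),                      # nothing statistically significant
    "Study C": {"positive", "negative"},   # counted in both columns
    "Study D": {"negative"},
}

tally = Counter(label for dirs in studies.values() for label in classify(dirs))

print("studies:", len(studies))
print("effects counted:", sum(tally.values()))
print(dict(tally))
```

Because "Study C" lands in two columns, the four hypothetical studies produce five counted effects.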

The first edition of The 123s of School Choice, released in 2019, built upon previous EdChoice publications, including the four editions of Greg Forster’s research summary A Win-Win Solution: The Empirical Evidence on School Choice. To identify new studies and to make sure that we hadn’t missed any previously published studies, we enlisted the help of Hanover Research. We asked them to conduct a search for works related to private school choice going back to 1995. Searches were conducted using EBSCO, JSTOR, ProQuest, Google Scholar, and the EconLit, ERIC, and National Bureau of Economic Research databases. Works include peer-reviewed papers in scholarly journals, reports, books, working papers, dissertations, and conference papers and proceedings. The following search terms were used: “school choice,” “ESA,” “school and voucher,” “tax and credit and scholarships,” “tuition and tax and credits,” “education and savings and accounts,” and “education and voucher.” Our review of records from Google Scholar was limited to the first 200 results returned for each search term. We conduct this search on an annual basis. The current edition reflects our systematic search as of March 2021.

As we often state, every study comes with its own caveats. Not all policies are created equal. Evidence from these evaluations tells us something about the design and implementation of these private school choice programs, too. In the case of Louisiana, for example, the program was designed in a way that seemed to generate strong disincentives for private schools to participate. We know this because most private schools in Louisiana chose not to participate in the program. Only one-third of Louisiana private schools signed up, and there is compelling evidence that these were lower-quality private schools. For instance, researchers discovered that schools with higher tuition levels and growing enrollment were less likely to sign up.2 Another study showed that private schools that signed up for the program experienced sharp enrollment declines during the years prior to entering the program relative to non-participating private schools.3

It is also true that while test scores provide information that at least some parents care about, they may miss conveying important program effects.4 And of course, parents do not consider test scores the most important schooling outcome.5

With these caveats in mind, please be sure to read past our findings where we discuss contextual issues and some limitations of school choice research. We also cover why we include some studies in our review and not others.

Findings


What Can Research Tell Us About School Choice?

When it comes to evaluating any public policy, social science is an important, but limited, tool in our toolbox.

The findings of studies, articles, and reports must be examined for their validity and also put in the context of values and priorities that exist outside the realm of the measurable and quantifiable. Studies are limited by their sample, their methods, the data available to researchers, and the quality of the outcome measures used to determine impact. If the sample is too limited, the data too messy, or the outcome measure uncorrelated with what we really care about, a study’s large effect size might not actually be all that meaningful. Studies like this get published all the time. Careful consumers will dig into them before drawing broad, sweeping conclusions.

But even the best designed studies are limited to things that we can measure and count. It is quite challenging to put a number on liberty, autonomy, dignity, respect, racism, or a host of constructs that we all know exist and are meaningful. Even if an intervention has a positive effect on some measurable outcome, it might violate a principle that supersedes it.

Social science should be used as a torch, not a cudgel. It should help us understand how programs work and how they can work better. As an organization that both creates research related to private school choice and regularly uses it, we think it is important both to summarize the extant literature on the topic and to speak frankly about its strengths and limitations.

Heterogeneity of Treatment

Gertrude Stein wrote “a rose is a rose is a rose,” but is it also true that “a voucher is a voucher is a voucher?”6 Not necessarily. No two private school choice programs are alike. They differ across an array of design features, from how they are funded to rules on accountability to eligibility criteria. The Cleveland Scholarship Program, for example, is worth $4,650 for elementary students and $6,000 for high school students, while the DC Opportunity Scholarship is $9,022 for elementary school students and $13,534 for high school students. In Louisiana, participating schools have to take the Louisiana state standardized test; in Florida’s Tax Credit Scholarship Program, students must simply take one of several approved nationally normed standardized tests. Some programs allow schools to apply admissions requirements to students; others do not. Some allow families to “top up” their scholarship, adding their own money to help pay for more expensive schools; others require participating schools to accept the voucher as full payment of tuition. Some programs require students to apply to a school first and then apply for the voucher, while others have students apply for the voucher first and then apply to the school. Some programs are statewide, while others are limited to certain geographic areas. Some are limited to low-income students; others are limited to students with special needs. The list goes on.

Any reasonable observer would expect these program differences to affect their impact on the students and schools that participate. When we see different outcomes from different studies, how much is that due to the peculiarities of those particular programs? What peculiarities drive those findings? We don’t yet know.

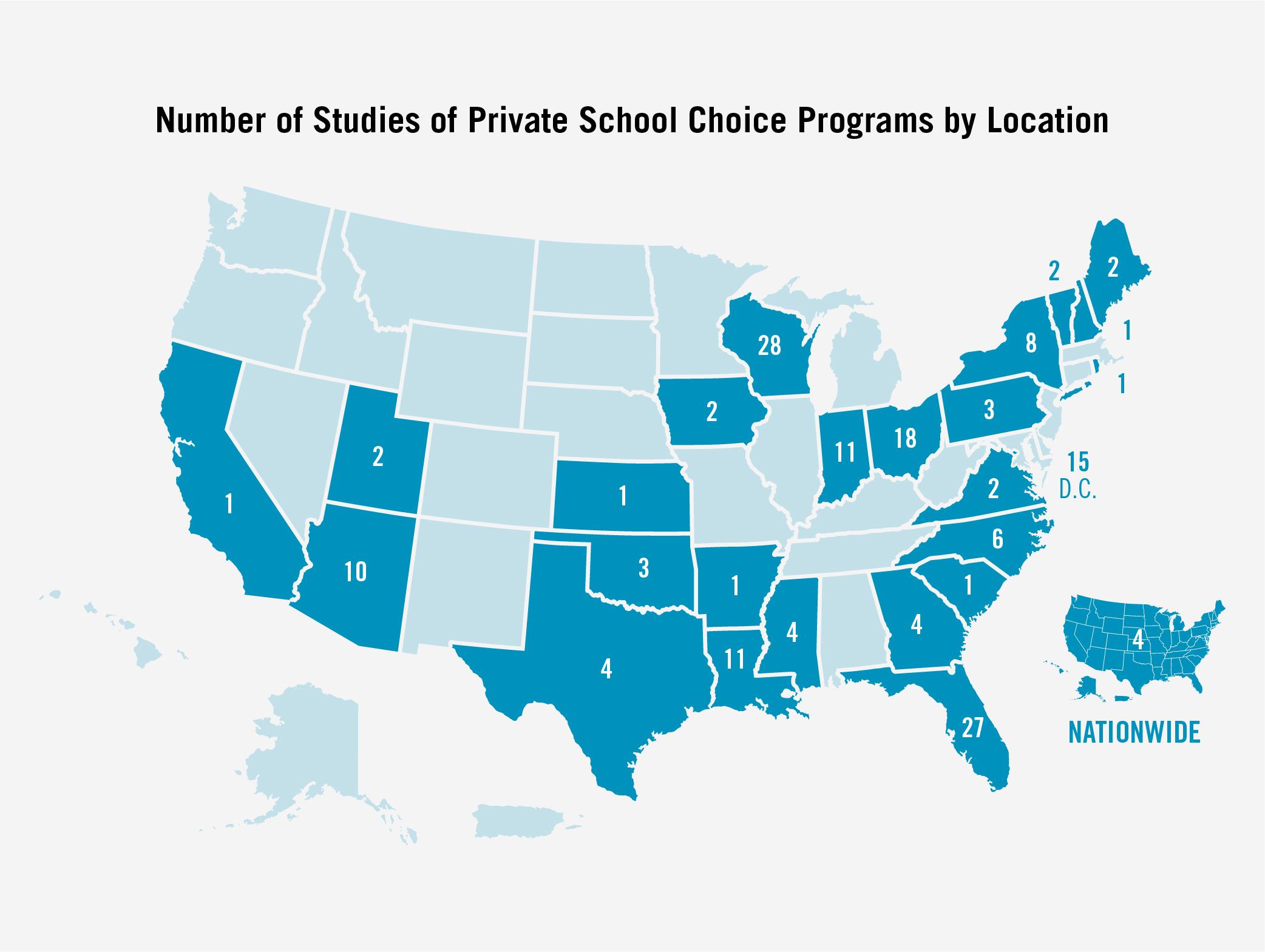

We combine these findings and advise some caution about over-interpretation. The findings of one study limited to one region or of a program that is structured in a particular way might not apply to another potential program in a different place that is structured in a different way. This is why in our summaries we are clear about the geographic location of the studies that we describe, so that readers can understand the context around the findings.

Measures Matter

It is always important to understand what researchers are measuring. Testing is widely implemented across all sectors of schooling, and thus it is unsurprising that a healthy segment of the school choice literature studies programs’ effects on student test scores. But it is important to note that testing is used differently in different education sectors. For most public and charter schools, test scores are part of state accountability systems. These schools can be rewarded or penalized based on how well their students perform. Most private schools do not participate in these systems. If you use a measure that one sector is pushed to maximize by the state and another is not, you might confuse the effect of that pushing with the effectiveness of the school.

Secondly, it is important to note that many private schools specifically eschew state standards and state standardized tests. They argue that those tests do not measure what matters and thus teach their own curriculum aligned to what they feel is most important. If we use the results on the state test to compare these schools, we might yet again confuse the results. The scores of schools that are aligned to the state curriculum might do better, not because they are “better” schools, but simply because they are teaching more explicitly what the state test is measuring.

It is also important to know why parents make choices. If they don’t value test scores, and thus don’t choose schools in an effort to maximize them, we shouldn’t be surprised if test scores are lower in the schools that they choose. Think of it this way. Some folks like big pickup trucks because they want to haul stuff in the bed or tow their boat to the lake on the weekend. They choose based on cargo space and towing capacity. If we measure cars based on fuel efficiency, arguing that better cars are more fuel efficient, it will look like all of these people are making “bad” choices. They aren’t. They are simply choosing on a different dimension.

Finally, it is important to note two papers that documented evidence suggesting a disconnect between test scores and long-run outcomes such as educational attainment in school choice program evaluations.7 There are plausible explanations for this disconnect. For instance, differences in test scores among students in public and private schools may simply reflect differences in curricula rather than quality. Long-run outcomes of educational attainment, on the other hand, may yield better proxies for how a private school choice program affected a student’s employment prospects and future earnings. To date, no study has examined the effect of any private school choice program on outcomes related to earned income or employment.

Why Randomized Control Trial Studies?

One key decision that we made in compiling the studies in the participant effects section was to limit the sample to randomized control trials (RCTs). Many studies of private school choice programs (several of which we reference later) are not RCTs, so throughout this guide we are clear about when we include or exclude non-RCT studies. Most research literatures either have very few RCT studies so far or are simply not conducive to that type of research design.

When evaluating the effect of a private school choice program, we have to ask the key question: “Compared to what?”

A decrease in average graduation rates among students participating in a choice program doesn’t tell us much about the effectiveness of the program. Comparing the change in program participants’ graduation rates with students in public schools is somewhat better, but even this comparison provides limited (and possibly misleading) information about the program’s effectiveness. There may be factors not being accounted for or observed that explain any difference in those outcomes. This fear is particularly acute in school choice research, as seeking out a school choice program evinces a level of motivation that is potentially not present in families that do not apply to such programs. In fact, trying to cope with selection bias is a central methodological issue in estimating the effects of school choice programs.

Ideally, to evaluate the effectiveness of a school choice program, we would compare the change in outcomes between students who use a scholarship with the change in outcomes of an identical group of students (“twins”) who do not participate in the program. Creating a comparison group that provides an “apples-to-apples” comparison is challenging.

The best methodology available to researchers for generating “apples-to-apples” comparisons is a randomized control trial, also called a random assignment study. These studies are also known as experimental studies and are widely considered to be the “gold standard” of research methodology. In fact, the What Works Clearinghouse in the U.S. Department of Education designates RCTs as the only research method that can receive the highest rating, “Meets Group Design Standards Without Reservations” [emphasis added].8

In RCTs, some random process (like a random drawing) is used to assign students to the treatment and control groups. This method is often referred to as the “gold standard” of research methods because the treatment and comparison groups are, on average, identical except for one aspect: one group receives the intervention while the other does not. We can attribute any observed differences in outcomes to the treatment (a causal relationship).
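The contrast between self-selected and randomly assigned comparison groups can be illustrated with a small simulation. The Python sketch below is purely hypothetical: the unobserved "motivation" variable, the score scale, the 50 percent lottery odds, and the true effect of 2 points are all assumptions we chose for illustration, not estimates from any study in our review.

```python
import random
from statistics import mean

def simulate(n=20000, true_effect=2.0, seed=0):
    """Compare a self-selected comparison with a lottery-based one.

    Every simulated student has an unobserved motivation level that
    raises outcomes on its own. We then look at the same students under
    two hypothetical enrollment rules: families opting in on their own,
    and a random lottery.
    """
    rng = random.Random(seed)
    opted_in, opted_out, winners, losers = [], [], [], []
    for _ in range(n):
        motivation = rng.gauss(0, 1)                     # never observed by the researcher
        baseline = 50 + 5 * motivation + rng.gauss(0, 1)

        # Rule 1: more motivated families are more likely to opt in.
        if motivation + rng.gauss(0, 1) > 0:
            opted_in.append(baseline + true_effect)
        else:
            opted_out.append(baseline)

        # Rule 2: a lottery decides, independent of motivation.
        if rng.random() < 0.5:
            winners.append(baseline + true_effect)
        else:
            losers.append(baseline)

    naive = mean(opted_in) - mean(opted_out)
    randomized = mean(winners) - mean(losers)
    return naive, randomized

naive_estimate, randomized_estimate = simulate()
print(f"self-selected comparison: {naive_estimate:.2f}")
print(f"lottery comparison:       {randomized_estimate:.2f}")
```

Under these assumptions, the self-selected comparison mixes the program's effect with the motivation gap between the two groups, while the lottery comparison lands close to the assumed true effect.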

Researchers who conduct RCT studies (also called “random assignment” studies) may report unbiased estimates of effects based on two different comparisons:

- Researchers may report estimates for “intent-to-treat” (ITT) effects, which compare outcomes between students who won the lottery and students who did not win the lottery. ITT is the estimated effect of winning the lottery.

- Researchers may also report “treatment-on-the-treated” (TOT) effects, which compare differences in outcomes between students who attended a private school and students who did not attend private school, regardless of their lottery outcome. TOT is the estimated effect of using the voucher.
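As a rough illustration of how the two estimates relate, the Python sketch below computes an ITT effect as a simple difference in means by lottery outcome, then rescales it by the difference in voucher usage rates between lottery winners and non-winners. That rescaling (often called a Bloom or Wald adjustment) is an assumption on our part about one common way a TOT-style estimate is obtained; individual studies in our review may use other estimators. The records are hypothetical.

```python
from statistics import mean

# Hypothetical records: (won_lottery, used_voucher, outcome_score)
students = [
    (True, True, 60.0), (True, True, 60.0),
    (True, False, 50.0), (True, False, 50.0),
    (False, False, 50.0), (False, False, 50.0),
    (False, False, 50.0), (False, False, 50.0),
]

def itt_and_tot(records):
    """Return (ITT, TOT) from lottery records.

    ITT: mean outcome of lottery winners minus mean outcome of non-winners.
    TOT: ITT divided by the gap in voucher usage rates between the two
         groups (assumed adjustment, see the note above).
    """
    winners = [r for r in records if r[0]]
    losers = [r for r in records if not r[0]]

    itt = mean(score for _, _, score in winners) - mean(score for _, _, score in losers)

    usage_gap = (mean(float(used) for _, used, _ in winners)
                 - mean(float(used) for _, used, _ in losers))
    if usage_gap == 0:
        raise ValueError("winning the lottery did not change voucher usage")
    return itt, itt / usage_gap

itt, tot = itt_and_tot(students)
print(f"ITT (effect of winning the lottery): {itt:.1f}")
print(f"TOT (effect of using the voucher):   {tot:.1f}")
```

In this toy data only half of the lottery winners use the voucher, so the effect of winning is diluted relative to the effect of using it.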

When random assignment is not possible, some researchers use statistical techniques to approximate randomization. These studies are sometimes referred to as nonexperimental studies. All research methods, including RCTs, have tradeoffs. While RCTs have very high internal validity because of their ability to control for unobservable factors (e.g., student and parent motivation), they do not necessarily provide very high (or low) external validity.

Internal validity is the degree to which the effects we observe can be attributed to the program and not other factors.

External validity is the extent to which results can be generalized to other students in other programs.

In addition to having a high degree of internal validity, another reason we favor RCTs over other methods is that, in the context of evaluating private school choice programs, RCTs occur at the level of the program itself. This is in contrast with RCTs in other education policy areas, such as charter schools. In charter school RCTs, lotteries occur at the school level, meaning that only schools that held lotteries are included in the study. Given that high-quality schools are likely to be in high demand and oversubscribed, results from these studies are likely to be representative of oversubscribed schools, but not necessarily representative of schools that are in low demand. Results from RCT studies of programs where the lottery is held at the program level give us an estimate of the effect of the program rather than just oversubscribed schools.

As you may have seen if you’ve already flipped through this guide, we report results from random assignment studies whenever possible. We also report results from nonexperimental studies that have some strategy for controlling for self-selection until 10 random assignment studies based on unique student populations become available.

Multiple Studies of the Same Programs

We include multiple studies of the same program in our review as unique observations. We include them because replication is an integral part of the scientific process for discovering truth. It is important to consider research by different researchers who study the same programs and different students. It is also important to consider reports that employ different rigorous methods. If these efforts arrive at similar conclusions, then we can have more confidence about the effects of a program we observe.

We also took care to avoid unnecessary double counting, as this could lead to one program excessively influencing the results. If an article or paper includes multiple distinct analyses of different private school choice programs, then we counted each of the analyses as distinct studies. We include replication studies by different research teams and studies that use different research methods.

In cases where a team of researchers conducts multiple studies to evaluate a given program over time, we include the most recent analysis from the evaluation. We exclude studies that were conducted by the same researchers or research team using the same data.

Why No Effect Sizes?

This guide is a summary of the relevant research on private school choice programs. It is not a meta-analysis of those research areas. Meta-analyses attempt to look at the estimates of program effects from individual studies and combine them to determine an overall average effect across all of the studies. These are difficult and complicated studies to do well. They involve norming the effect sizes to numbers that can be combined with one another and averaged.

That kind of methodology is beyond the scope of our project here. Our goal is to summarize the literature. To do so, we have sacrificed a measure of specificity. We believe that tradeoff is worth making.
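For readers curious about what the norming and averaging step of a meta-analysis involves, here is a minimal Python sketch. It assumes the simplest textbook approach: each study's raw difference is divided by the standard deviation of its outcome measure, and the resulting standardized effects are combined with inverse-variance weights (a fixed-effect average). The two "studies" and their numbers are hypothetical, and real meta-analyses make many more adjustments.

```python
from math import sqrt

def standardized_effect(raw_difference, outcome_sd):
    """Norm a raw score difference to standard-deviation units."""
    return raw_difference / outcome_sd

def pooled_effect(effects_and_ses):
    """Inverse-variance weighted average of (effect, standard_error) pairs.

    More precise studies (smaller standard errors) get more weight.
    Returns the pooled effect and its standard error.
    """
    weights = [1.0 / se ** 2 for _, se in effects_and_ses]
    total_weight = sum(weights)
    pooled = sum(w * d for w, (d, _) in zip(weights, effects_and_ses)) / total_weight
    return pooled, sqrt(1.0 / total_weight)

# Hypothetical inputs: two tests on different scales.
study_inputs = [
    (standardized_effect(10.0, 100.0), 0.05),  # 10 points on a test with SD 100
    (standardized_effect(3.0, 15.0), 0.10),    # 3 points on a test with SD 15
]

pooled, pooled_se = pooled_effect(study_inputs)
print(f"pooled effect: {pooled:.3f} SD (standard error {pooled_se:.3f})")
```

Even this toy version shows why we leave the exercise to dedicated meta-analyses: the result depends on choices about how to norm each outcome and how to weight each study.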

In the next section, we cite relevant meta-analyses and systematic reviews that have been conducted on the literature of the particular topics that we explore and provide additional context where needed.

Other Systematic Reviews and Additional Research Context

This section discusses relevant meta-analyses and systematic reviews that have been conducted on the literature of the particular topics that we explore and provides additional context where needed. Greg Forster’s Win-Win reports provide earlier reviews for studies of each of the outcomes included below.

Program Participant Test Scores

Researchers from the University of Arkansas conducted a meta-analysis of the test score effects of private school choice programs globally and estimated the overall effects of these programs on participants’ reading, English, and math test scores.9 Students who won the voucher lottery saw small positive but statistically insignificant gains on test scores. Students who won the voucher lottery and used the voucher saw larger positive gains on test scores that equate roughly to 49 more days of learning in math and 28 more days of learning in reading and English. Notably, reading and math scores increase the longer a student uses a voucher. Estimates in reading and English for students participating in U.S. voucher programs indicate a small, negative, and statistically insignificant average treatment effect in students’ first year in a program. In year four, this effect is positive and statistically significant. The pattern is similar for math, except that year four estimates are positive but remain statistically insignificant.

Two nonexperimental studies on voucher programs in Indiana and Ohio used matching methods to study the effects of the programs on math and reading test scores. Both studies found negative math and reading test score effects.10 A longitudinal evaluation of Milwaukee’s voucher program also used matching methods to study test score effects and found null effects for math and positive effects for reading.11

Although matching may be the best research method available for studying other programs that are not or cannot be oversubscribed, it is not as effective as randomized experiments in controlling for self-selection bias. Given the large number of random assignment studies of the effects of private school choice programs on participant test scores, we are more selective with our methods so that we focus attention on the more rigorously designed studies.

Program Participant Attainment

Lisa Foreman reviewed the academic literature on educational attainment effects on students participating in private school voucher programs and charter schools.12 She found generally positive findings in the studies she reviewed. We do not include one study that was included in Foreman’s review because it is an observational study and does not use methods to account for self-selection.

Parent Satisfaction

Evan Rhinesmith conducted a systematic review to synthesize the parent satisfaction literature for private school choice programs. The systematic review reports that participating in private school choice programs leads to higher levels of parent satisfaction. Rhinesmith states, “If methodology is behind the results, we would expect the experimental and observational studies to differ dramatically in their results. They do not. Whether students enrolled in their choice program through lottery or self-sorted into their private school of choice, the results have shown that providing choice in education leads to higher levels of parent satisfaction.”13

Public School Students’ Test Scores

Several systematic reviews have been conducted to synthesize the competitive effects literature for private school choice programs.14 All of these systematic reviews acknowledge that private school choice programs tend to induce public schools to improve. The body of evidence suggests that improvement increases with the intensity of competition.

Civic Values and Practices

Corey DeAngelis published a systematic review of the civic effects of school choice programs in 2017. While others have compiled civic outcomes research of other types of schooling, including charter schools, DeAngelis’s review exclusively examines private school choice. He found generally null to positive results of private school choice programs on students’ tolerance, null to positive results for civic engagement, and positive results for social order. For social order, the author reviewed studies that examine the levels of criminal activity of school choice participants.15

Racial/Ethnic Integration

Elise Swanson surveyed the literature on the effects of various school choice sectors (magnet, charters, and private) on integration in schools. In her review of studies on voucher programs, she reviewed eight studies, finding that seven studies found voucher programs improved school integration and one study was unable to detect any effects. She notes that “it is perhaps unsurprising that traditional public schools exhibit, to this day, high levels of racial segregation, and that choice programs, including vouchers, that decouple the link between address and school actually increase racial integration.”16

Studies Included in Review

Program Participant Test Scores

Heidi H. Erickson, Jonathan N. Mills, and Patrick J. Wolf (2021). The Effects of the Louisiana Scholarship Program on Student Achievement and College Entrance. Journal of Research on Educational Effectiveness. https://doi.org/10.1080/19345747.2021.1938311

Ann Webber, Ning Rui, Roberta Garrison-Mogren, Robert B. Olsen, and Babette Gutmann (2019). Evaluation of the DC Opportunity Scholarship Program: Impacts Three Years After Students Applied (NCEE 2019-4006). Retrieved from Institute of Education Sciences website: https://ies.ed.gov/ncee/pubs/20194006/pdf/20194006.pdf

Atila Abdulkadiroglu, Parag A. Pathak, and Christopher R. Walters (2018). Free to Choose: Can School Choice Reduce Student Achievement? American Economic Journal: Applied Economics, 10(1), pp. 175–206. https://dx.doi.org/10.1257/app.20160634

Marianne Bitler, Thurston Domina, Emily Penner, and Hilary Hoynes (2015). Distributional Analysis in Educational Evaluation: A Case Study from the New York City Voucher Program. Journal of Research on Educational Effectiveness, 8(3), pp. 419–450. https://dx.doi.org/10.1080/19345747.2014.921259

Patrick J. Wolf, Brian Kisida, Babette Gutmann, Michael Puma, Nada Eissa, and Lou Rizo (2013). School Vouchers and Student Outcomes: Experimental Evidence from Washington, D.C. Journal of Policy Analysis and Management, 32(2), pp. 246–270. https://dx.doi.org/10.1002/pam.21691

Hui Jin, John Barnard, and Donald Rubin (2010). A Modified General Location Model for Noncompliance with Missing Data: Revisiting the New York City School Choice Scholarship Program using Principal Stratification. Journal of Educational and Behavioral Statistics, 35(2), pp. 154–173. https://dx.doi.org/10.3102/1076998609346968

Joshua Cowen (2008). School Choice as a Latent Variable: Estimating the “Complier Average Causal Effect” of Vouchers in Charlotte. Policy Studies Journal, 36(2), pp. 301–315. https://dx.doi.org/10.1111/j.1541-0072.2008.00268.x

Carlos Lamarche (2008). Private School Vouchers and Student Achievement: A Fixed Effects Quantile Regression Evaluation. Labour Economics, 15(4), pp. 575–590. https://doi.org/10.1016/j.labeco.2008.04.007

Eric Bettinger and Robert Slonim (2006). Using Experimental Economics to Measure the Effects of a Natural Educational Experiment on Altruism. Journal of Public Economics, 90(8–9), pp. 1625–1648. https://dx.doi.org/10.1016/j.jpubeco.2005.10.006

Alan Krueger and Pei Zhu (2004). Another Look at the New York City School Voucher Experiment. American Behavioral Scientist, 47(5), pp. 658–698. https://dx.doi.org/10.1177/0002764203260152

John Barnard, Constantine Frangakis, Jennifer Hill, and Donald Rubin (2003). Principal Stratification Approach to Broken Randomized Experiments: A Case Study of School Choice Vouchers in New York City. Journal of the American Statistical Association, 98(462), pp. 310–326. https://dx.doi.org/10.1198/016214503000071

William G. Howell, Patrick J. Wolf, David E. Campbell, and Paul E. Peterson (2002). School Vouchers and Academic Performance: Results from Three Randomized Field Trials. Journal of Policy Analysis and Management, 21(2), pp. 191–217. https://dx.doi.org/10.1002/pam.10023

Jay P. Greene (2001). Vouchers in Charlotte. Education Matters, 1(2), pp. 55–60. Retrieved from Education Next website: http://educationnext.org/files/ednext20012_46b.pdf

Jay P. Greene, Paul Peterson, and Jiangtao Du (1999). Effectiveness of School Choice: The Milwaukee Experiment. Education and Urban Society, 31(2), pp. 190–213. https://dx.doi.org/10.1177/0013124599031002005

Cecilia E. Rouse (1998). Private School Vouchers and Student Achievement: An Evaluation of the Milwaukee Parental Choice Program. Quarterly Journal of Economics, 113(2), pp. 553–602. https://dx.doi.org/10.1162/003355398555685

Program Participant Attainment

Megan J. Austin and Max Pardo (2021). Do college and career readiness and early college success in Indiana vary depending on whether students attend public, charter, or private voucher high schools? (REL 2021–071). U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance, Regional Educational Laboratory Midwest. Retrieved from: http://ies.ed.gov/ncee/edlabs

Heidi H. Erickson, Jonathan N. Mills, and Patrick J. Wolf (2021). The Effects of the Louisiana Scholarship Program on Student Achievement and College Entrance. Journal of Research on Educational Effectiveness. https://doi.org/10.1080/19345747.2021.1938311

Albert Cheng and Paul E. Peterson (2020). Experimentally Estimated Impacts of School Vouchers on Educational Attainments of Moderately and Severely Disadvantaged Students (PEPG 20-02). Retrieved from Harvard University website: https://www.hks.harvard.edu/sites/default/files/Taubman/PEPG/research/PEPG20_02.pdf

** This paper is an update to the analysis from: Matthew M. Chingos and Paul E. Peterson (2015). Experimentally Estimated Impacts of School Vouchers on College Enrollment and Degree Attainment. Journal of Public Economics, 122, pp. 1–12. https://dx.doi.org/10.1016/j.jpubeco.2014.11.013

Matthew M. Chingos, Daniel Kuehn, Tomas Monarrez, Patrick J. Wolf, John F. Witte, and Brian Kisida (2019). The Effects of Means-Tested Private School Choice Programs on College Enrollment and Graduation. Retrieved from Urban Institute website: https://www.urban.org/sites/default/files/publication/100665/the_effects_of_means-tested_private_school_choice_programs_on_college_enrollment_and_graduation_2.pdf

Patrick J. Wolf, Brian Kisida, Babette Gutmann, Michael Puma, Nada Eissa, and Lou Rizo (2013). School Vouchers and Student Outcomes: Experimental Evidence from Washington, DC. Journal of Policy Analysis and Management, 32(2), pp. 246–270. http://dx.doi.org/10.1002/pam.21691

Parent Satisfaction

Andrew D. Catt and Albert Cheng (2019). Families’ Experiences on the New Frontier of Educational Choice: Findings from a Survey of K–12 Parents in Arizona. Retrieved from EdChoice website: https://www.edchoice.org/wp-content/uploads/2019/05/2019-4-Arizona-Parent-Survey-by-Andrew-Catt-and-Albert-Chang.pdf

Shannon Varga et al. (2021). Choices and Challenges: Florida Parents’ Experiences with the State’s McKay and Gardiner Scholarship Programs for Students with Disabilities. Boston University, CERES Institute for Children & Youth. Retrieved from: https://ceresinstitute.org/wp-content/uploads/2021/04/ChoicesChallenges_REPORT.pdf

Legislative Audit Bureau (2017). Special Needs Scholarship Program (Report 18-6). Retrieved from Wisconsin State Legislature website: https://legis.wisconsin.gov/lab/media/2753/18-6full.pdf

Department of Revenue Administration (2018). Scholarship Organization Report: Giving and Going Alliance. Retrieved from https://www.revenue.nh.gov/quick-links/documents/givingandgoingalliance.PDF; Department of Revenue Administration (2018). Scholarship Organization Report: Children’s Scholarship Fund. Retrieved from https://www.revenue.nh.gov/quick-links/documents/childrensscholarshipfund.PDF

Andrew D. Catt and Evan Rhinesmith (2017). Why Indiana Parents Choose: A Cross-Sector Survey of Parents’ Views in a Robust School Choice Environment. Retrieved from EdChoice website: https://www.edchoice.org/wp-content/uploads/2017/09/Why-Indiana-Parents-Choose-1.pdf

Anna J. Egalite, Ashley Gray, and Trip Stallings (2017). Parent Perspectives: Applicants to North Carolina’s Opportunity Scholarship Program Share Their Experiences (OS Evaluation Report 2). Retrieved from North Carolina State University website: https://ced.ncsu.edu/elphd/wp-content/uploads/sites/2/2017/07/Parent-Perspectives.pdf

Andrew D. Catt and Evan Rhinesmith (2016). Why Parents Choose: A Survey of Private School and School Choice Parents in Indiana. Retrieved from EdChoice website: https://www.edchoice.org/wp-content/uploads/2017/03/Why-Parents-Choose-A-Survey-of-Private-School-and-School-Choice-Parents-in-Indiana-by-Andrew-D.-Catt-and-Evan-Rhinesmith.pdf

Brett Kittredge (2016). The Special Needs ESA: What Families Enrolled in the Program Are Saying After Year One. Retrieved from Empower Mississippi website: http://empowerms.org/wp-content/uploads/2016/12/ESA-Report-final.pdf

Paul DiPerna (2015). Why Indiana Voucher Parents Choose Private Schools. Retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/07/Indiana-Survey.pdf

Brian Kisida and Patrick Wolf (2015). Customer Satisfaction and Educational Outcomes: Experimental Impacts of the Market-Based Delivery of Public Education. International Public Management Journal, 18(2), pp. 265–285. https://dx.doi.org/10.1080/10967494.2014.996629

Jonathan Butcher and Jason Bedrick (2013). Schooling Satisfaction: Arizona Parents’ Opinions on Using Education Savings Accounts. Retrieved from EdChoice website: https://www.edchoice.org/wp-content/uploads/2013/10/SCHOOLING-SATISFACTION-Arizona-Parents-Opinions-on-Using-Education-Savings-Accounts-NEW.pdf

James P. Kelly, III, and Benjamin Scafidi (2013). More Than Scores: An Analysis of Why and How Parents Choose Private Schools. Retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/07/More-Than-Scores.pdf

John F. Witte, Patrick J. Wolf, Joshua M. Cowen, David J. Fleming, and Juanita Lucas-McLean (2008). MPCP Longitudinal Educational Growth Study: Baseline Report (SCDP Milwaukee Evaluation Report 5). Retrieved from University of Arkansas Department of Education Reform website: http://www.uaedreform.org/downloads/2008/02/report-5-mpcp-longitudinal-educational-growth-study-baseline-report.pdf

Jay P. Greene and Greg Forster (2003). Vouchers for Special Education Students: An Evaluation of Florida’s McKay Scholarship Program (Civic Report 38). Retrieved from Manhattan Institute website: https://media4.manhattan-institute.org/pdf/cr_38.pdf

William G. Howell and Paul E. Peterson (2002). The Education Gap: Vouchers and Urban Schools. Retrieved from https://www.jstor.org/stable/10.7864/j.ctt128086

Jay P. Greene (2001). Vouchers in Charlotte. Education Matters, 1(2), pp. 55–60. Retrieved from Education Next website: https://www.educationnext.org/files/ednext20012_46b.pdf

Paul E. Peterson and David E. Campbell (2001). An Evaluation of the Children’s Scholarship Fund (PEPG 01-03). Retrieved from Harvard University website: https://sites.hks.harvard.edu/pepg/PDF/Papers/CSF%20Report%202001.pdf

Paul E. Peterson, David E. Campbell, and Martin R. West (2001). An Evaluation of the BASIC Fund Scholarship Program in the San Francisco Bay Area, California (PEPG 01-01). Retrieved from Harvard University website: https://sites.hks.harvard.edu/pepg/PDF/Papers/BasicReport.PDF

John F. Witte (2000). The Market Approach to Education: An Analysis of America’s First Voucher Program. Retrieved from https://www.jstor.org/stable/j.ctt7rqnw

Kim K. Metcalf (1999). Evaluation of the Cleveland Scholarship and Tutoring Grant Program: 1996-1999. Retrieved from https://cdm16007.contentdm.oclc.org/digital/collection/p267401ccp2/id/1948

Paul E. Peterson, William G. Howell, and Jay P. Greene (1999). An Evaluation of the Cleveland Voucher Program After Two Years. Retrieved from Harvard University website: https://sites.hks.harvard.edu/pepg/PDF/Papers/clev2ex.pdf

Paul E. Peterson, David Myers, and William G. Howell (1999). An Evaluation of the Horizon Scholarship Program in the Edgewood Independent School District, San Antonio, Texas: The First Year. Retrieved from Harvard University website: https://sites.hks.harvard.edu/pepg/PDF/Papers/edge99.pdf

Jay P. Greene, William G. Howell, and Paul E. Peterson (1998). Lessons from the Cleveland Scholarship Program. In Paul E. Peterson and Bryan C. Hassel (Eds.), Learning from School Choice (pp. 357–392). Retrieved from: https://cpb-usw2.wpmucdn.com/voices.uchicago.edu/dist/5/539/files/2017/05/Lessons-10vatg9.pdf

David J. Weinschrott and Sally B. Kilgore (1998). Evidence from the Indianapolis Voucher Program. In Paul E. Peterson and Bryan C. Hassel (Eds.), Learning from School Choice (pp. 307–334). Retrieved from https://books.google.com/books?id=138qI-WoYMYC&pg=PA307

Public School Students’ Test Scores

Anna J. Egalite and Jonathan N. Mills (2021). Competitive Impacts of Means-Tested Vouchers on Public School Performance: Evidence from Louisiana. Education Finance and Policy, 16(1), pp. 66–91. https://doi.org/10.1162/edfp_a_00286

Yusuf Canbolat (2021). The long-term effect of competition on public school achievement: Evidence from the Indiana Choice Scholarship Program. Education Policy Analysis Archives, 29(97). https://doi.org/10.14507/epaa.29.6311

David N. Figlio, Cassandra M.D. Hart, and Krzysztof Karbownik (2021). Effects of Scaling Up Private School Choice Programs on Public School Students, Working Paper No. 9056, CESifo, retrieved from: https://www.cesifo.org/en/publikationen/2021/working-paper/effects-scaling-private-school-choice-programs-public-school

Anna J. Egalite and Andrew D. Catt (2020). Competitive Effects of the Indiana Choice Scholarship Program on Traditional Public School Achievement and Graduation Rates (EdChoice working paper 2020-3), retrieved from: https://www.edchoice.org/wp-content/uploads/2020/08/EdChoice-Working-Paper-2020-3-Competitive-Effects-of-the-Indiana-Choice-Scholarship-Program.pdf

David Figlio and Krzysztof Karbownik (2016). Evaluation of Ohio’s EdChoice Scholarship Program: Selection, Competition, and Performance Effects, retrieved from Thomas B. Fordham Institute website: https://fordhaminstitute.org/sites/default/files/publication/pdfs/FORDHAM-Ed-Choice-Evaluation-Report_online-edition.pdf

Nathan L. Gray, John D. Merrifield, and Kerry A. Adzima (2016). A Private Universal Voucher Program’s Effects on Traditional Public Schools. Journal of Economics and Finance, 40(2), pp. 319–344. https://dx.doi.org/10.1007/s12197-014-9309-z

Daniel H. Bowen and Julie R. Trivitt (2014). Stigma Without Sanctions: The (Lack of) Impact of Private School Vouchers on Student Achievement. Education Policy Analysis Archives, 22(87). https://dx.doi.org/10.14507/epaa.v22n87.2014

David Figlio and Cassandra M.D. Hart (2014). Competitive Effects of Means-Tested School Vouchers. American Economic Journal: Applied Economics, 6(1), pp. 133–156. https://dx.doi.org/10.1257/app.6.1.133

Rajashri Chakrabarti (2013). Vouchers, Public School Response, and the Role of Incentives: Evidence from Florida. Economic Inquiry, 51(1), pp. 500–526. https://dx.doi.org/10.1111/j.1465-7295.2012.00455.x

Cecilia E. Rouse, Jane Hannaway, Dan Goldhaber, and David Figlio (2013). Feeling the Florida Heat? How Low-Performing Schools Respond to Voucher and Accountability Pressure. American Economic Journal: Economic Policy, 5(2), pp. 251–281. https://dx.doi.org/10.1257/pol.5.2.251

Matthew Carr (2011). The Impact of Ohio’s EdChoice on Traditional Public School Performance. Cato Journal, 31(2), pp. 257–284. Retrieved from Cato Institute website: http://object.cato.org/sites/cato.org/files/serials/files/cato-journal/2011/5/cj31n2-5.pdf

Marcus A. Winters and Jay P. Greene (2011). Public School Response to Special Education Vouchers: The Impact of Florida’s McKay Scholarship Program on Disability Diagnosis and Student Achievement in Public Schools. Educational Evaluation and Policy Analysis, 33(2), pp. 138–158. https://dx.doi.org/10.3102/0162373711404220

Nicholas S. Mader (2010). School Choice, Competition and Academic Quality: Essays on the Milwaukee Parental Choice Program (Doctoral dissertation). Retrieved from ProQuest (3424049)

Jay P. Greene and Ryan H. Marsh (2009). The Effect of Milwaukee’s Parental Choice Program on Student Achievement in Milwaukee Public Schools (SCDP Comprehensive Longitudinal Evaluation of the Milwaukee Parental Choice Program Report 11). Retrieved from University of Arkansas Department of Education Reform website: http://www.uaedreform.org/downloads/2009/03/report-11-the-effect-of-milwaukees-parental-choice-program-on-student-achievement-in-milwaukee-public-schools.pdf

Rajashri Chakrabarti (2008). Can Increasing Private School Participation and Monetary Loss in a Voucher Program Affect Public School Performance? Evidence from Milwaukee, Journal of Public Economics, 92(5–6), pp. 1371–1393. https://dx.doi.org/10.1016/j.jpubeco.2007.06.009

Greg Forster (2008). Lost Opportunity: An Empirical Analysis of How Vouchers Affected Florida Public Schools. School Choice Issues in the State. Retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/09/Lost-Opportunity-How-Vouchers-Affected-Florida-Public-Schools.pdf

Greg Forster (2008). Promising Start: An Empirical Analysis of How EdChoice Vouchers Affect Ohio Public Schools. School Choice Issues in the State. Retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/09/Promising-Start-How-EdChoice-Vouchers-Affect-Ohio-Public-Schools.pdf

Martin Carnoy, Frank Adamson, Amita Chudgar, Thomas F. Luschei, and John F. Witte (2007). Vouchers and Public School Performance: A Case Study of the Milwaukee Parental Choice Program. Retrieved from Economic Policy Institute website: https://www.epi.org/publication/book_vouchers

Jay P. Greene and Marcus A. Winters (2007). An Evaluation of the Effect of DC’s Voucher Program on Public School Achievement and Racial Integration After One Year. Journal of Catholic Education, 11(1), pp. 83–101. http://dx.doi.org/10.15365/joce.1101072013

David N. Figlio and Cecilia E. Rouse (2006). Do Accountability and Voucher Threats Improve Low-Performing Schools? Journal of Public Economics, 90(1–2), pp. 239–255. https://dx.doi.org/10.1016/j.jpubeco.2005.08.005

Martin R. West and Paul E. Peterson (2006). The Efficacy of Choice Threats within School Accountability Systems: Results from Legislatively Induced Experiments. Economic Journal, 116(510), pp. C46–C62. http://dx.doi.org/10.1111/j.1468-0297.2006.01075.x

Jay P. Greene and Marcus A. Winters (2004). Competition Passes the Test. Education Next, 4(3), pp. 66–71. Retrieved from https://www.educationnext.org/files/ednext20043_66.pdf

Jay P. Greene and Greg Forster (2002). Rising to the Challenge: The Effect of School Choice on Public Schools in Milwaukee and San Antonio (Civic Bulletin 27). Retrieved from Manhattan Institute website: http://www.manhattan-institute.org/pdf/cb_27.pdf

Christopher Hammons (2002). The Effects of Town Tuitioning in Vermont and Maine. School Choice Issues in Depth. Retrieved from EdChoice website: https://www.edchoice.org/wp-content/uploads/2019/03/The-Effects-of-Town-Tuitioning-in-Vermont-and-Maine.pdf

Caroline M. Hoxby (2002). How School Choice Affects the Achievement of Public School Students. In Paul T. Hill (Ed.), Choice with Equity (pp. 141–78). Retrieved from https://books.google.com/books?id=IeUk3myQu-oC&lpg=PP1&pg=PA141

Jay P. Greene (2001). An Evaluation of the Florida A-Plus Accountability and School Choice Program. Retrieved from Manhattan Institute website: http://www.manhattan-institute.org/pdf/cr_aplus.pdf

Civic Values and Practices

Corey A. DeAngelis and Patrick J. Wolf (2020). Private School Choice and Character: Evidence from Milwaukee. The Journal of Private Enterprise, 35(3), pp. 13–48. Retrieved from: http://journal.apee.org/index.php/Parte3_2020_Journal_of_Private_Enterprise_Vol_35_No_3_Fall

Corey A. DeAngelis and Patrick J. Wolf (2018). Will Democracy Endure Private School Choice? The Effect of the Milwaukee Parental Choice Program on Adult Voting Behavior. https://dx.doi.org/10.2139/ssrn.3177517

Deven Carlson, Matthew M. Chingos, and David E. Campbell (2017). The Effect of Private School Vouchers on Political Participation. Journal of Research on Educational Effectiveness, 10(1), pp. 545–569. https://dx.doi.org/10.1080/19345747.2016.1256458

Jonathan N. Mills, Albert Cheng, Collin E. Hitt, Patrick J. Wolf, and Jay P. Greene (2016). Measures of Student Non-Cognitive Skills and Political Tolerance After Two Years of the Louisiana Scholarship Program (Louisiana Scholarship Program Evaluation Report 2). https://dx.doi.org/10.2139/ssrn.2738782

David J. Fleming (2014). Learning from Schools: School Choice, Political Learning, and Policy Feedback. Policy Studies Journal, 42(1), pp. 55–78. https://dx.doi.org/10.1111/psj.12042

David J. Fleming, William Mitchell, and Michal McNally (2014). Can Markets Make Citizens? School Vouchers, Political Tolerance, and Civic Engagement. Journal of School Choice, 8(2), pp. 213–236. https://dx.doi.org/10.1080/15582159.2014.905397

David E. Campbell (2008). The Civic Side of School Choice: An Empirical Analysis of Civic Education in Public and Private Schools. Brigham Young University Law Review, 2008(2), pp. 487–523. Retrieved from https://digitalcommons.law.byu.edu/lawreview/vol2008/iss2/11

Eric Bettinger and Robert Slonim (2006). Using Experimental Economics to Measure the Effects of a Natural Educational Experiment on Altruism. Journal of Public Economics, 90(8–9), pp. 1625–1648. https://dx.doi.org/10.1016/j.jpubeco.2005.10.006

William G. Howell and Paul E. Peterson (2006). The Education Gap: Vouchers and Urban Schools, revised edition. Retrieved from https://books.google.com/books?id=lAzmJs8i-rUC

Paul E. Peterson and David E. Campbell (2001). An Evaluation of the Children’s Scholarship Fund (PEPG 01-03). Retrieved from Harvard University website: https://sites.hks.harvard.edu/pepg/PDF/Papers/CSF%20Report%202001.pdf

Patrick J. Wolf, Paul E. Peterson, and Martin R. West (2001). Results of a School Voucher Experiment: The Case of Washington, D.C. after Two Years (PEPG 01–05). Retrieved from https://files.eric.ed.gov/fulltext/ED457272.pdf

Racial/Ethnic Integration

Anna J. Egalite, Jonathan N. Mills, and Patrick J. Wolf (2017). The Impact of Targeted School Vouchers on Racial Stratification in Louisiana Schools. Education and Urban Society, 49(3), pp. 271–296. https://dx.doi.org/10.1177/0013124516643760

Jay P. Greene, Jonathan N. Mills, and Stuart Buck (2010). The Milwaukee Parental Choice Program’s Effect on School Integration (School Choice Demonstration Project Report 20). Retrieved from University of Arkansas Department of Education Reform website: http://www.uaedreform.org/downloads/2010/04/report-20-the-milwaukee-parental-choice-programs-effect-on-school-integration.pdf

Jay P. Greene and Marcus A. Winters (2007). An Evaluation of the Effect of DC’s Voucher Program on Public School Achievement and Racial Integration After One Year. Journal of Catholic Education, 11(1), pp. 83–101. http://dx.doi.org/10.15365/joce.1101072013

Greg Forster (2006). Segregation Levels in Cleveland Public Schools and the Cleveland Voucher Program. School Choice Issues in the State. Retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/09/Segregation-Levels-in-Cleveland-Public-Schools-and-the-Cleveland-Voucher-Program.pdf

Greg Forster (2006). Segregation Levels in Milwaukee Public Schools and the Milwaukee Voucher Program. School Choice Issues in the State. Retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/09/Segregation-Levels-in-Milwaukee-Public-Schools-and-the-Milwaukee-Voucher-Program.pdf

Howard L. Fuller and George A. Mitchell (2000). The Impact of School Choice on Integration in Milwaukee Private Schools. Current Education Issues 2000-02. Retrieved from https://files.eric.ed.gov/fulltext/ED443939.pdf

Jay P. Greene (1999). Choice and Community: The Racial, Economic and Religious Context of Parental Choice in Cleveland. Retrieved from https://files.eric.ed.gov/fulltext/ED441928.pdf

Fiscal Effects

Dagney Faulk and Michael J. Hicks (2021). School Choice and State Spending on Education in Indiana, Ball State University, Center for Business and Economic Research. Retrieved from: http://projects.cberdata.org/reports/SchoolSpendingChoice-20210611web.pdf

Martin F. Lueken (2021). The Fiscal Effects of Private K-12 Education Choice: Analyzing the costs and savings of private school choice programs in America, retrieved from: https://www.edchoice.org/wp-content/uploads/2021/11/The-Fiscal-Effects-of-School-Choice-WEB-reduced.pdf

Alexander Nikolov and A. Fletcher Mangum (2021). Scholarship Tax Credits in Virginia: Net Fiscal Impact of Virginia’s Education Improvement Scholarships Tax Credits Program, Mangum Economics. Retrieved from: http://www.thomasjeffersoninst.org/files/3/EISTC%20Study%20FINAL.pdf

Julie R. Trivitt and Corey A. DeAngelis (2020). Dollars and Sense: Calculating the Fiscal Effects of the Louisiana Scholarship Program. Journal of School Choice, https://doi.org/10.1080/15582159.2020.1726704

Heidi Holmes Erickson and Benjamin Scafidi (2020). An Analysis of the Fiscal and Economic Impact of Georgia’s Qualified Education Expense (QEE) Tax Credit Scholarship Program, Education Economics Center, Kennesaw State University, retrieved from: https://coles.kennesaw.edu/education-economics-center/docs/QEE-full-report.pdf

Deborah Sheasby (2020). How the Arizona School Tuition Organization Tax Credits Save the State Money, Arizona Tuition Organization, retrieved from: http://www.azto.org/wp-content/uploads/2020/01/How-the-AZ-STO-Tax-Credits-Save-the-State-Money-1-8-20.pdf

Jacob Dearmon and Russell Evans (2018). Fiscal Impact Analysis of the Oklahoma Equal Opportunity Scholarship Tax Credit, retrieved from Oklahoma City University website: https://www.okcu.edu/uploads/business/docs/Scholarship-Tuition-Tax-Credit-FY-2017-Fiscal-Impact-Report.pdf

Joint Legislative Committee on Performance Evaluation and Expenditure Review (2018). A Statutory Review of Mississippi’s Education Scholarship Account Program (Report 628), retrieved from https://www.peer.ms.gov/Reports/reports/rpt628.pdf

Julie R. Trivitt and Corey A. DeAngelis (2018). State-Level Fiscal Impact of the Succeed Scholarship Program 2017-2018. Arkansas Education Reports, 15(1), pp. 1–21. Retrieved from http://scholarworks.uark.edu/oepreport/1

Wisconsin Legislative Audit Bureau (2018). Special Needs Scholarship Program: Department of Public Instruction (Report 18-6). Retrieved from https://legis.wisconsin.gov/lab/media/2753/18-6full.pdf

Anthony G. Girardi and Angela Gullickson (2017). Iowa’s School Tuition Organization Tax Credits Program Evaluation Study. Retrieved from Iowa Department of Revenue website: https://tax.iowa.gov/sites/files/idr/2017%20STO%20Tax%20Credit%20Evaluation%20Study%20%281%29.pdf

SummaSource (2017). Final Report: Analysis of the Financial Impact of the Alabama Accountability Act, SummaSource at Auburn Montgomery, retrieved from: https://www.federationforchildren.org/wp-content/uploads/2017/02/AUM-Fiscal-Impact-Report.pdf

Corey A. DeAngelis and Julie R. Trivitt (2016). Squeezing the Public School Districts: The Fiscal Effects of Eliminating the Louisiana Scholarship Program (EDRE Working Paper 2016-10). Retrieved from University of Arkansas Department of Education Reform website: http://www.uaedreform.org/downloads/2016/08/squeezing-the-public-school-districts-the-fiscal-effects-of-eliminating-the-louisiana-scholarship-program.pdf

Jeff Spalding (2014). The School Voucher Audit: Do Publicly Funded Private School Choice Programs Save Money? Retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/07/The-School-Voucher-Audit-Do-Publicly-Funded-Private-School-Choice-Programs-Save-Money.pdf

Patrick J. Wolf and Michael McShane (2013). Is the Juice Worth the Squeeze? A Benefit/Cost Analysis of the District of Columbia Opportunity Scholarship Program. Education Finance and Policy, 8(1), pp. 74–99. https://dx.doi.org/10.1162/EDFP_a_00083

Legislative Office of Economic and Demographic Research (2012). Revenue Estimating Conference, retrieved from: http://www.edr.state.fl.us/Content/conferences/revenueimpact/archives/2012/pdf/page540-546.pdf

Robert M. Costrell (2010). The Fiscal Impact of the Milwaukee Parental Choice Program: 2010-2011 Update and Policy Options (SCDP Milwaukee Evaluation Report 22). Retrieved from University of Arkansas Department of Education Reform website: http://www.uaedreform.org/downloads/2011/03/report-22-the-fiscal-impact-of-the-milwaukee-parental-choice-program-2010-2011-update-and-policy-options.pdf

John Merrifield and Nathan L. Gray (2009). An Evaluation of the CEO Horizon, 1998–2008, Edgewood Tuition Voucher Program. Journal of School Choice, 3(4), pp. 414–415. https://dx.doi.org/10.1080/15582150903430764

OPPAGA (2008). The Corporate Tax Credit Scholarship Program Saves State Dollars (Report 08-68). Retrieved from http://www.oppaga.state.fl.us/reports/pdf/0868rpt.pdf

Susan L. Aud (2007). Education by the Numbers: The Fiscal Effect of School Choice Programs, 1990-2006. School Choice Issues in Depth. Retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/09/Education-by-the-Numbers-Fiscal-Effect-of-School-Choice-Programs.pdf

Susan L. Aud and Leon Michos (2006). Spreading Freedom and Saving Money: The Fiscal Impact of the D.C. Voucher Program. Retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/09/Spreading-Freedom-and-Saving-Money-The-Fiscal-Impact-of-the-DC-Voucher-Program.pdf

Note: A study is a unique set of one or more data analyses, published together, of a single school choice program. “Unique” means using data and analytic specifications not identical to those in previously reported studies. “Published” means reported to the public in any type of publication, paper, article or report. By this definition, all data analyses on a single school choice program that are reported in a single publication are taken together as one “study,” but analyses studying multiple programs are taken as multiple studies even if they are published together.

Endnotes

- EdChoice (2020), Comparing Ed Reforms: Assessing the Experimental Research on Nine K–12 Education Reforms, retrieved from: https://www.edchoice.org/wp-content/uploads/2020/04/comparing-ed-reforms.pdf

- Yujie Sude, Corey A. DeAngelis, and Patrick J. Wolf (2018), Supplying Choice: An Analysis of School Participation Decisions in Voucher Programs in Washington, DC, Indiana, and Louisiana, Journal of School Choice, 12(1), pp. 8–33, https://doi.org/10.1080/15582159.2017.1345232

- Atila Abdulkadiroglu, Parag A. Pathak, and Christopher R. Walters (2018), Free to Choose: Can School Choice Reduce Student Achievement? American Economic Journal: Applied Economics, 10(1), pp. 175–206, https://dx.doi.org/10.1257/app.20160634

- There is evidence that points to a disconnect between test scores and long-run outcomes. For example, please see: Collin Hitt, Michael Q. McShane, and Patrick J. Wolf (2018), Do Impacts on Test Scores Even Matter? Lessons from Long-Run Outcomes in School Choice Research: Attainment Versus Achievement Impacts and Rethinking How to Evaluate School Choice Programs, retrieved from American Enterprise Institute website: http://www.aei.org/wp-content/uploads/2018/04/Do-Impacts-on-Test-Scores-Even-Matter.pdf; Corey A. DeAngelis (2018), Divergences between Effects on Test Scores and Effects on Non-Cognitive Skills, https://dx.doi.org/10.2139/ssrn.3273422

- James P. Kelly and Benjamin Scafidi (2013). More than Scores: An Analysis of Why and How Parents Choose Private Schools, retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2015/07/More-Than-Scores.pdf

- Gertrude Stein (1922), Geography and Plays

- Collin Hitt, Michael Q. McShane, and Patrick J. Wolf (2018), Do Impacts on Test Scores Even Matter? Lessons from Long-Run Outcomes in School Choice Research: Attainment Versus Achievement Impacts and Rethinking How to Evaluate School Choice Programs, retrieved from American Enterprise Institute website: http://www.aei.org/wp-content/uploads/2018/04/Do-Impacts-on-Test-Scores-Even-Matter.pdf; Corey A. DeAngelis (2018), Divergences between Effects on Test Scores and Effects on Non-Cognitive Skills, https://dx.doi.org/10.2139/ssrn.3273422

- What Works Clearinghouse (2014), Procedures and Standards Handbook: Version 3.0, retrieved from Institute of Education Sciences website: https://ies.ed.gov/ncee/wwc/docs/referenceresources/wwc_procedures_v3_0_standards_handbook.pdf

- M. Danish Shakeel, Kaitlin P. Anderson, and Patrick J. Wolf (2016). The Participant Effects of Private School Vouchers across the Globe: A Meta-Analytic and Systematic Review (EDRE Working Paper 2016-07), https://dx.doi.org/10.2139/ssrn.2777633. For more information, see: University of Arkansas, Overall TOT Impacts by Year – US Voucher RCTs: Math [Image file], accessed March 18, 2020, retrieved from http://www.uaedreform.org/downloads/2017/04/u-s-voucher-experiments-math-impacts-by-year.png; University of Arkansas, Overall TOT Impacts by Year – US Voucher RCTs: Reading [Image file], accessed March 18, 2020, retrieved from http://www.uaedreform.org/downloads/2017/04/u-s-voucher-experiments-reading-impacts-by-year.png

- Joseph Waddington and Mark Berends (2018), Impact of the Indiana Choice Scholarship Program: Achievement Effects for Students in Upper Elementary and Middle School, Journal of Policy Analysis and Management, 37(4), pp. 783–808, https://dx.doi.org/10.1002/pam.22086; David Figlio and Krzysztof Karbownik (2016), Evaluation of Ohio’s EdChoice Scholarship Program: Selection, Competition, and Performance Effects, retrieved from Thomas B. Fordham Institute website: https://fordhaminstitute.org/sites/default/files/publication/pdfs/FORDHAM-Ed-Choice-Evaluation-Report_online-edition.pdf

- Patrick J. Wolf (2012), The Comprehensive Longitudinal Evaluation of the Milwaukee Parental Choice Program: Summary of Final Reports (SCDP Milwaukee Evaluation Report 36), retrieved from University of Arkansas Department of Education Reform website: http://www.uaedreform.org/downloads/2012/02/report-36-the-comprehensive-longitudinal-evaluation-of-the-milwaukee-parental-choice-program.pdf

- Leesa M. Foreman (2017), Educational Attainment Effects of Public and Private School Choice, Journal of School Choice, 11(4), pp. 642–654, https://dx.doi.org/10.1080/15582159.2017.1395619

- Evan Rhinesmith (2017), A Review of the Research on Parent Satisfaction in Private School Choice Programs, Journal of School Choice, 11(4), p. 600, https://dx.doi.org/10.1080/15582159.2017.1395639

- Dennis Epple, Richard E. Romano, and Miguel Urquiola (2017), School Vouchers: A Survey of the Economics Literature, Journal of Economic Literature, 55(2), p. 441, https://dx.doi.org/10.1257/jel.20150679; Patrick J. Wolf and Anna J. Egalite (2016), Pursuing Innovation: How Can Educational Choice Transform K–12 Education in the U.S.?, p. 1, retrieved from EdChoice website: http://www.edchoice.org/wp-content/uploads/2016/05/2016-4-Pursuing-Innovation-WEB-1.pdf; Anna J. Egalite and Patrick J. Wolf (2016), A Review of the Empirical Research on Private School Choice, Peabody Journal of Education, 91(4), p. 451, https://dx.doi.org/10.1080/0161956X.2016.1207436; Anna J. Egalite (2013), Measuring Competitive Effects from School Voucher Programs: A Systematic Review, Journal of School Choice, 7(4), p. 443, https://dx.doi.org/10.1080/15582159.2013.837759

- Corey A. DeAngelis (2017), Do Self-Interested Schooling Selections Improve Society? A Review of the Evidence, Journal of School Choice, 11(4), pp. 546–558, https://dx.doi.org/10.1080/15582159.2017.1395615

- Elise Swanson (2017), Can We Have It All? A Review of the Impacts of School Choice on Racial Integration, Journal of School Choice, 11(4), p. 523, https://dx.doi.org/10.1080/15582159.2017.1395644